Introduction

Ever had that moment when your AI just gets what you’re asking for without any examples? That’s not magic; it’s zero-shot learning at work, and it’s changing how machines understand us.

Machine learning used to be like teaching a toddler: show them the same thing repeatedly until they finally get it. Zero-shot learning flips that script entirely.

What if I told you that today’s AI can classify objects it’s never seen before, translate languages it wasn’t explicitly trained on, and generate content in styles it’s never encountered? That’s the power of transferable knowledge, and it’s reshaping the future of AI development.

1. Fundamentals of Zero-Shot Learning

1.1 Key concepts and definitions

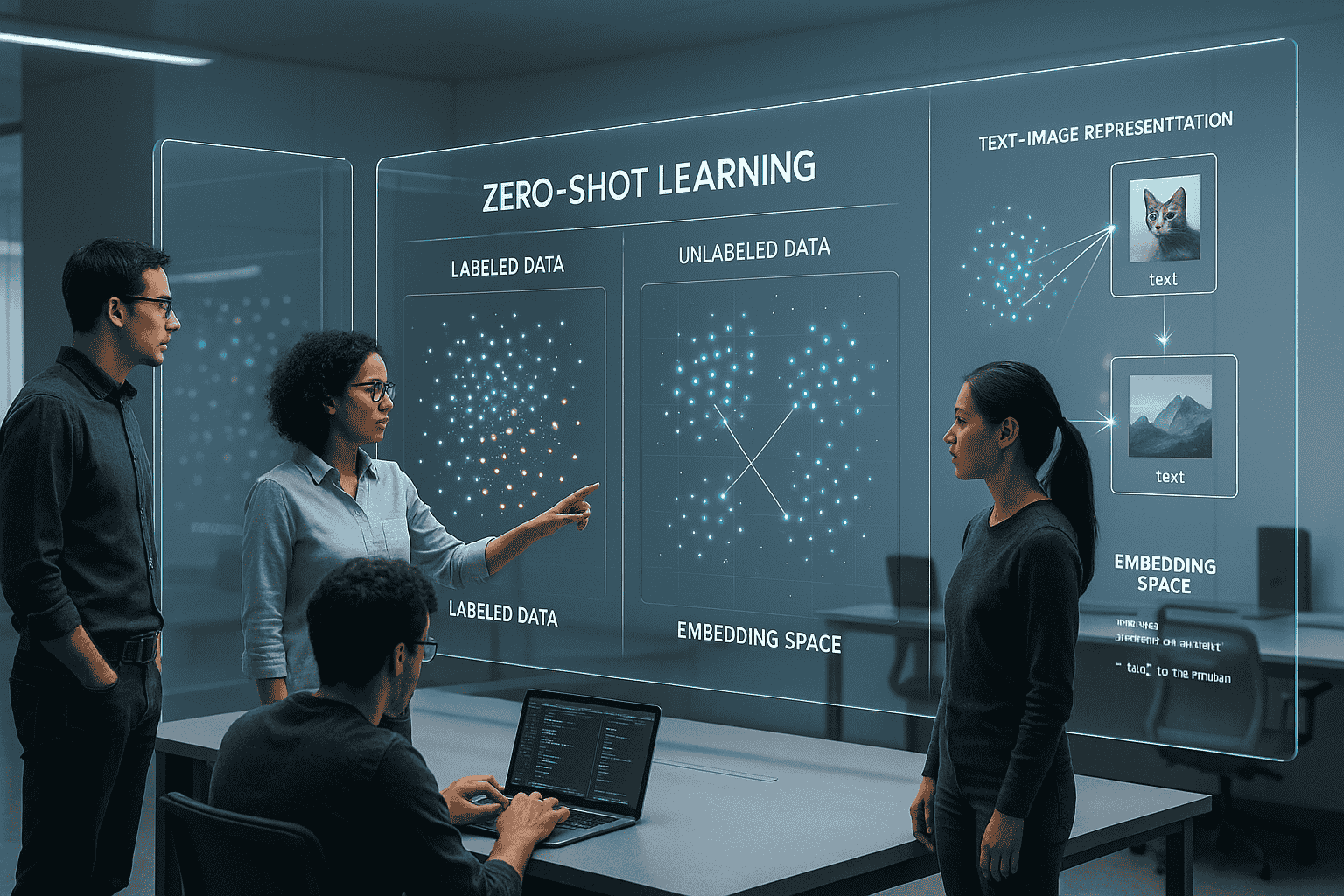

Zero-shot learning is essentially machine learning with training wheels off. It’s the art of making predictions about things your model has never seen before.

Think about it – humans do this all the time. You’ve never seen a purple elephant wearing roller skates, but you can imagine what it looks like because you know what elephants, purple things, and roller skates are.

At its core, zero-shot learning relies on transferable knowledge. The model learns to connect semantic attributes to visual features, allowing it to recognize new classes without specific training examples.

1.2 How it differs from other machine learning approaches

Traditional machine learning is like studying for a specific test – you know exactly what questions will be asked.

Zero-shot learning? That’s more like preparing for anything life might throw at you.

1.3 Comparison of Learning Paradigms

| Learning Paradigm | Training Classes | Test Classes | Key Difference |
|---|---|---|---|
| Traditional ML | Classes A, B, C | Classes A, B, C | Seen classes only |
| Few-shot learning | Classes A, B, C | Classes D, E (with a few examples) | Limited examples of new classes |
| Zero-shot learning | Classes A, B, C | Classes D, E (no examples) | No examples of new classes |

The magic happens through semantic spaces – while traditional models can only recognize what they’ve been explicitly trained on, zero-shot models can make connections between seen and unseen concepts.

1.4 Historical development and breakthroughs

Zero-shot learning didn’t appear overnight. The concept started gaining traction around 2008-2009 when researchers began exploring how to make models more flexible.

The real game-changer came in 2013 when researchers started using word embeddings (like Word2Vec) to bridge the gap between linguistic knowledge and visual recognition.

Fast forward to 2017, and we saw generalized zero-shot learning frameworks emerge, capable of recognizing both seen and unseen classes simultaneously.

The field exploded with the introduction of large language models. GPT and CLIP demonstrated unprecedented zero-shot capabilities across multiple domains – a massive leap forward.

1.5 Core technical mechanisms

So how does this magic actually work? Zero-shot learning typically relies on three key mechanisms:

- Attribute-based learning: Models learn visual attributes (like “striped” or “has wings”) that can be combined in new ways.

- Embedding spaces: By mapping both images and class descriptions into the same vector space, models can measure similarity between unseen classes and known visual features.

- Knowledge graphs: Some approaches use structured knowledge to understand relationships between concepts, helping models make logical leaps to new classes.

The cornerstone is finding that common ground between what the model knows and what it needs to predict. Modern approaches often use transformers to create these rich semantic spaces where new concepts can be understood through their relationship to familiar ones.
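
To make the attribute-based mechanism concrete, here is a minimal sketch. The attribute names, the class signatures, and the function `predict_unseen_class` are all made up for illustration, and the attribute probabilities are hard-coded stand-ins for what real attribute detectors (trained only on seen classes) would output. It scores each candidate class by how well its attribute signature explains the predicted attributes.

```python
import numpy as np

# Hypothetical attribute vocabulary and class signatures (1 = class has the attribute).
ATTRIBUTES = ["striped", "has_wings", "four_legs", "lives_in_water"]
CLASS_SIGNATURES = {
    "zebra":   np.array([1, 0, 1, 0]),
    "eagle":   np.array([0, 1, 0, 0]),
    "dolphin": np.array([0, 0, 0, 1]),
}

def predict_unseen_class(attribute_probs, signatures=CLASS_SIGNATURES):
    """Pick the class whose signature best explains the predicted attributes.

    attribute_probs: one probability per entry in ATTRIBUTES, assumed to come
    from attribute detectors that never saw the candidate classes.
    """
    probs = np.clip(np.asarray(attribute_probs, dtype=float), 1e-6, 1 - 1e-6)
    scores = {}
    for name, sig in signatures.items():
        # Log-likelihood of the signature, treating attributes as independent.
        scores[name] = float(np.sum(sig * np.log(probs) + (1 - sig) * np.log(1 - probs)))
    return max(scores, key=scores.get), scores

# Stand-in detector output for one image: striped, no wings, four legs, not aquatic.
label, scores = predict_unseen_class([0.9, 0.05, 0.8, 0.1])
print(label)  # zebra
```

No zebra image is needed at training time here: the class is identified purely from its description in terms of attributes the model already understands.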

2. Real-World Applications

2.1 Natural Language Processing Implementation

Zero-shot learning is revolutionizing NLP by enabling models to understand tasks they’ve never seen before. Unlike traditional models that require extensive training data for each task, zero-shot approaches can classify text into new categories without specific examples.

GPT models are prime examples. They can answer questions, translate languages, and summarize content without task-specific training. Imagine asking a model to classify customer feedback into categories it’s never been explicitly taught – that’s zero-shot learning in action.

Companies are implementing this to build more flexible chatbots that can handle unexpected user queries without breaking down. The magic happens through prompt engineering – crafting instructions that guide the model to perform new tasks on the fly.
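
Here is a rough sketch of what that looks like for the customer-feedback example. The helper names `build_zero_shot_prompt` and `parse_label` are hypothetical, and no real model API is called: the `reply` string is a stand-in for whatever your model returns. The point is that the categories live in the instruction, not in any training data.

```python
def build_zero_shot_prompt(text, labels):
    """Build an instruction-only classification prompt (no labeled examples)."""
    options = "\n".join(f"- {label}" for label in labels)
    return (
        "Classify the customer feedback into exactly one of these categories:\n"
        f"{options}\n\n"
        f"Feedback: {text}\n"
        "Answer with the category name only."
    )

def parse_label(reply, labels, fallback="unknown"):
    """Map a free-text model reply back onto the allowed label set."""
    cleaned = reply.strip().lower()
    for label in labels:
        if label.lower() in cleaned:
            return label
    return fallback

labels = ["billing", "shipping", "product quality"]
prompt = build_zero_shot_prompt("My package arrived two weeks late.", labels)

# Stand-in for a model response; swap in your own model call here.
reply = "Shipping."
print(parse_label(reply, labels))  # shipping
```

Parsing the reply against the allowed labels matters in practice, because a generative model is free to answer with extra punctuation or words that are not in your category list.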

2.2 Computer Vision Use Cases

The computer vision world is going wild with zero-shot capabilities. CLIP (Contrastive Language-Image Pre-training) by OpenAI can identify objects it’s never been explicitly trained on just by understanding the relationship between images and text descriptions.

Retail companies use zero-shot vision models to recognize new products without retraining their systems. Security applications can detect unusual activities without needing examples of every possible threat.

A particularly cool application? Museums using zero-shot models to identify and catalog artifacts without needing specialized models for each historical period or object type.

2.3 Healthcare Diagnostics

Healthcare is perhaps the most promising frontier for zero-shot learning. Radiologists are using systems that can identify rare conditions even when training data is scarce.

One breakthrough application helps doctors spot unusual patterns in medical images that might indicate rare diseases – even when the system wasn’t specifically trained on those conditions. This is game-changing for diagnosing orphan diseases that affect too few people to generate large training datasets.

Drug discovery teams are employing zero-shot approaches to predict how new molecular compounds might interact with various proteins, dramatically speeding up the initial screening process.

2.4 Financial Forecasting

Financial analysts are embracing zero-shot learning to detect market anomalies and predict trends for new assets with limited historical data.

Trading algorithms now adapt to emerging market conditions without requiring complete retraining. They can transfer knowledge from familiar patterns to novel situations – like recognizing signs of market stress in previously unseen combinations of indicators.

Fraud detection systems powered by zero-shot learning can identify new scam patterns by understanding the conceptual similarities to known fraudulent activities, staying ahead of criminals who constantly evolve their tactics.

2.5 Recommendation Systems

The recommendation engine space is being transformed by zero-shot capabilities. Streaming services can now recommend new content without the “cold start” problem that typically happens when a new show or movie lacks user interaction data.

E-commerce platforms are using zero-shot recommenders to suggest products from new categories based on abstract features rather than just historical purchases. This creates more serendipitous discovery experiences for shoppers.

The most advanced systems can even understand customer preferences across completely different domains – recommending books based on movie tastes or suggesting music based on art preferences – all without explicit training on these cross-domain relationships.

3. Advantages of Zero-Shot Learning

3.1 Reducing dependency on labeled data

Labeled data is the bottleneck that keeps most AI projects stuck in the mud. We’ve all been there – spending weeks manually tagging thousands of images or sentences just to train a decent model. Zero-shot learning flips this scenario on its head.

With zero-shot approaches, your models can recognize things they’ve never explicitly seen before. Think about it – if you know what a horse looks like and someone tells you a zebra is a horse with black-and-white stripes, you can probably pick out a zebra the first time you see one, without anyone labeling examples for you. Zero-shot learning works the same way.

The beauty here is obvious. You save hundreds of hours of expensive annotation work. Your data scientists can focus on solving real problems instead of babysitting labeling tasks. And you can deploy models faster because you’re not waiting for that perfect dataset to materialize.

3.2 Scalability across domains

Domain barriers are crumbling thanks to zero-shot learning. Traditional models get confused when you move them from one context to another, but zero-shot models just roll with it.

A zero-shot model trained on medical texts can often handle legal documents without task-specific retraining, although how well it does depends on how far apart the two domains are. That’s game-changing for businesses working across multiple sectors.

This cross-domain flexibility means you build once, deploy everywhere. No more custom models for every little project. The cost savings alone make zero-shot approaches worth investigating.

3.3 Adaptability to new tasks

Zero-shot learning shines brightest when facing tasks it wasn’t explicitly trained for.

Say you built a product categorization system, but now suddenly need to classify customer sentiment. With traditional approaches, you’d start from scratch. With zero-shot learning, your existing model can often handle the new challenge immediately.

This adaptability makes your AI infrastructure far more future-proof. As business needs evolve, your models can often evolve with them, with little or no retraining.

The real-world impact is dramatic: faster innovation cycles, lower maintenance costs, and the ability to say “yes” to new use cases that would previously take months to implement.

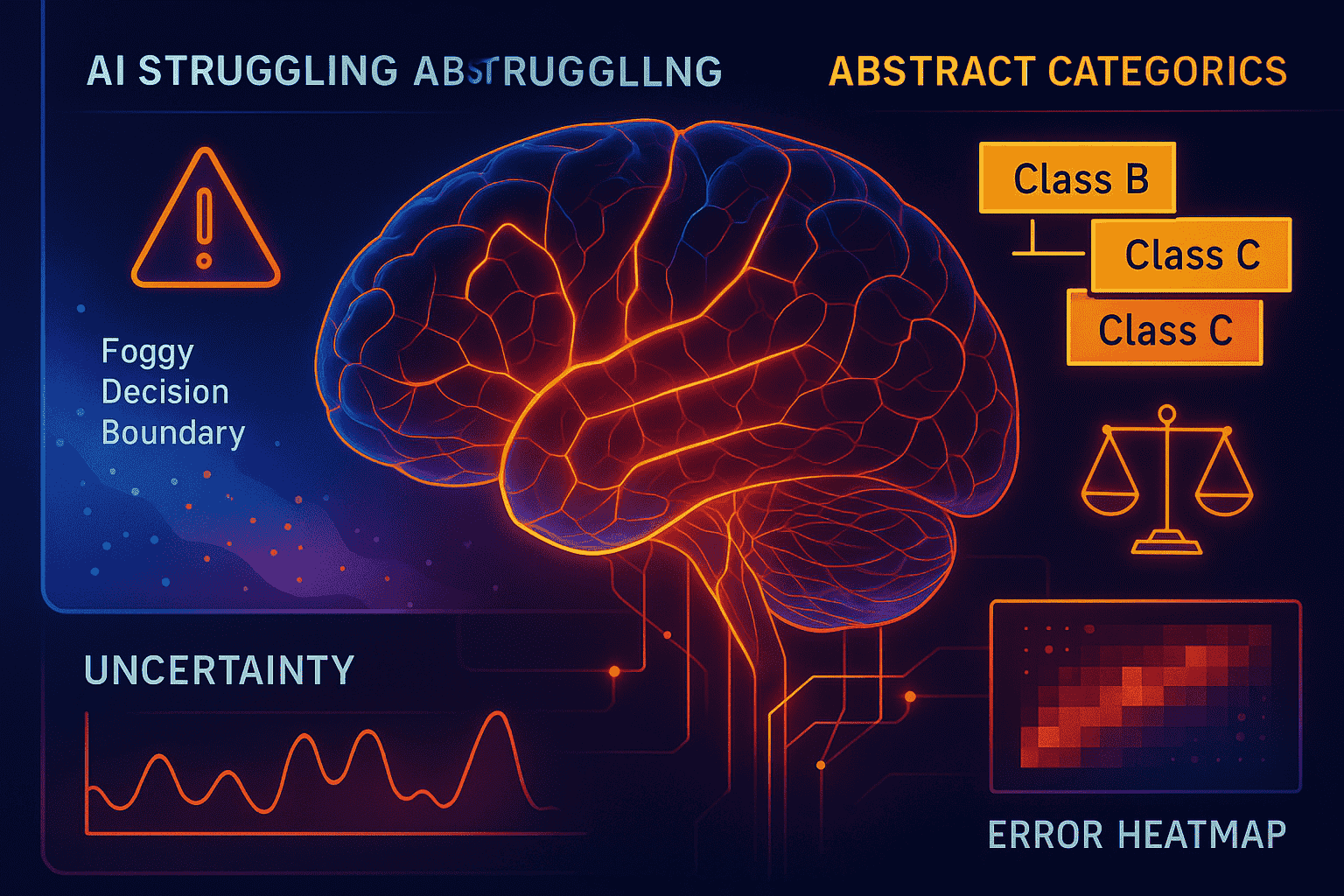

4. Challenges and Limitations

4.1 Performance trade-offs versus supervised learning

Zero-shot learning is cool, but let’s not kid ourselves – it comes with some serious trade-offs when compared to traditional supervised approaches.

The accuracy gap is real. When you have tons of labeled data, supervised models will almost always outperform zero-shot alternatives. It’s like comparing a rookie who’s never seen a basketball game to LeBron James. The rookie might surprise you occasionally, but consistency? Not even close.

The computational demands hit differently too. Zero-shot models need to be much larger to capture those cross-domain relationships. We’re talking bigger models, more parameters, and heavier inference costs.

4.2 Domain adaptation difficulties

Zero-shot learning stumbles hard when the source and target domains don’t play nice together.

The semantic gap problem is a killer. If your model was trained on product reviews but you’re trying to classify medical texts, good luck! The vocabulary shift alone can tank your performance.

Distribution shifts wreak havoc too. Your model trained on perfect studio photos will fall apart when handed grainy surveillance footage. The visual characteristics are just too different.

4.3 Comparison: Supervised Learning vs. Zero-Shot Learning

| Aspect | Supervised Learning | Zero-Shot Learning |
|---|---|---|
| Accuracy | Higher on in-domain tasks | Lower but more flexible |
| Data Requirements | Lots of labeled examples | No task-specific labels |
| Model Size | Can be relatively compact | Typically much larger |
| Inference Speed | Usually faster | Often slower |

4.4 Model interpretability issues

Try explaining why your zero-shot model made a particular prediction. Go ahead, I’ll wait.

That’s the problem. The black-box nature of these models makes them incredibly difficult to interpret. The knowledge transfer mechanisms happening under the hood are complex and often opaque.

This lack of transparency creates real problems:

- Debugging becomes a nightmare

- Performance failures are harder to diagnose

- Building trust with stakeholders gets complicated

4.5 Bias and fairness considerations

Zero-shot models inherit all the biases from their training data and then some. Since they’re trained on massive, often web-scraped datasets, they absorb all kinds of problematic associations.

What’s worse, these biases can manifest in surprising ways when the model encounters new domains. A seemingly fair model might suddenly produce biased outputs when applied to a different context.

The evaluation challenge compounds this issue. How do you test for fairness across all possible future applications? You can’t. This makes mitigating bias in zero-shot learning particularly tricky and concerning.

5. Building Effective Zero-Shot Models

5.1 Architectural considerations

Building zero-shot models isn’t like baking a cake with a recipe. You’ve got to think differently about your neural architecture.

The backbone matters—a lot. Transformers have crushed it in this space because they handle the semantic relationships between words and concepts that zero-shot learning depends on. But it’s not just about slapping together the biggest transformer you can find.

What you really need is an architecture that creates a shared embedding space where both seen and unseen classes can mingle effectively. Dual-encoder setups work wonders here—one pathway for your input data, another for your class descriptions.
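
As a toy illustration of the dual-encoder idea, the sketch below uses two randomly initialized linear projections in NumPy. Everything here is an assumption for demonstration: the `LinearEncoder` class, the feature sizes (512 and 300), and the shared dimension (64) are invented, and the weights are untrained, so the predictions are meaningless. What it does show is the shape of the architecture: two separate pathways that land in one space where similarity can be measured.

```python
import numpy as np

rng = np.random.default_rng(0)

class LinearEncoder:
    """Toy encoder: one linear projection followed by L2 normalization."""

    def __init__(self, in_dim, shared_dim):
        # Untrained random weights; a real system would learn these.
        self.weights = rng.normal(size=(in_dim, shared_dim)) / np.sqrt(in_dim)

    def __call__(self, x):
        z = np.atleast_2d(x) @ self.weights
        return z / np.linalg.norm(z, axis=1, keepdims=True)

# Two pathways with different input sizes but the same output size.
input_encoder = LinearEncoder(in_dim=512, shared_dim=64)  # e.g. image features
class_encoder = LinearEncoder(in_dim=300, shared_dim=64)  # e.g. description features

inputs = rng.normal(size=(4, 512))       # 4 inputs to classify
class_descs = rng.normal(size=(3, 300))  # 3 candidate classes, seen or unseen

# Cosine similarity between every input and every class description.
similarity = input_encoder(inputs) @ class_encoder(class_descs).T
predictions = similarity.argmax(axis=1)
print(similarity.shape)  # (4, 3)
```

Adding a new class at inference time only means encoding one more description and appending a row to `class_descs`; the input pathway does not change.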

5.2 Feature representation strategies

The secret sauce of zero-shot learning? How you represent your features.

Good feature spaces need to be:

- Dense with meaningful information

- Transferable across domains

- Semantically organized

Visual-semantic embeddings are game-changers. They map images and text into the same vector space, letting your model connect dots it’s never explicitly seen before.

Try this: instead of single-point embeddings, work with distributional representations. They capture uncertainty better, which is gold when you’re asking your model to recognize stuff it’s never seen.

5.3 Prompt engineering techniques

Prompts are your zero-shot model’s GPS. Bad directions, bad results.

Think about these prompt strategies:

- Chain-of-thought prompting (walk the model through reasoning steps)

- A few examples inside your prompt when pure zero-shot falls short (strictly speaking, that makes it few-shot prompting)

- Contrastive prompting (what it is vs. what it isn’t)

The magic happens when you provide context without giving away the answer. That’s the balancing act.
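
The strategies listed above can be sketched as plain prompt templates. These helper functions and their wording are illustrative assumptions, not a standard API, and the contrastive template is just one possible reading of “what it is vs. what it isn’t”.

```python
def chain_of_thought_prompt(question):
    """Ask for reasoning steps before the final answer."""
    return (
        f"{question}\n"
        "Let's think step by step, then give the final answer on its own line."
    )

def with_examples_prompt(question, examples):
    """Prepend a few worked (question, answer) pairs to the prompt."""
    shots = "\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"{shots}\nQ: {question}\nA:"

def contrastive_prompt(text, target, not_target):
    """Spell out both what the category is and what it is not."""
    return (
        f"Decide whether the text is about {target}.\n"
        f"It counts as {target} only if it is NOT merely {not_target}.\n"
        f"Text: {text}\n"
        "Answer yes or no."
    )

print(chain_of_thought_prompt("A train leaves at 3pm and arrives at 5:30pm. How long is the trip?"))
```

Keeping each strategy as a small function makes it easy to run the same inputs through several prompt styles and compare the results side by side.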

5.4 Model selection criteria

Picking the right model isn’t about chasing state-of-the-art benchmarks.

Focus on:

- Generalization capabilities over raw performance

- Robustness to distribution shifts

- Calibration (does it know when it doesn’t know?)

- Inference efficiency (because production environments aren’t research playgrounds)

The best zero-shot models often aren’t the ones with the most parameters but the ones trained on diverse data with thoughtful objectives.
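
Calibration is the least intuitive item on that list, so here is one common way to put a number on it: expected calibration error (ECE). This is a minimal sketch that assumes you already have each prediction’s confidence (for example, the top softmax probability) and whether it was correct; the bin count of 10 is an arbitrary choice.

```python
import numpy as np

def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between confidence and accuracy across confidence bins."""
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    ece = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        # Bins are (lo, hi], so a confidence of exactly 0 is ignored.
        in_bin = (confidences > lo) & (confidences <= hi)
        if in_bin.any():
            gap = abs(correct[in_bin].mean() - confidences[in_bin].mean())
            ece += in_bin.mean() * gap  # weight by the share of samples in the bin
    return float(ece)

# Toy data: 95% confident and always right, 55% confident and right half the time.
print(expected_calibration_error([0.95, 0.95, 0.55, 0.55], [1, 1, 1, 0]))
```

A value near zero means the model’s confidence tracks its accuracy, which is exactly what you want before trusting it on classes it has never seen.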

5.5 Evaluation methodologies

Traditional evaluation metrics fall apart with zero-shot models. Accuracy alone won’t cut it.

You need to test:

- Harmonic mean accuracy between seen and unseen classes

- Area under the seen-unseen curve (AUSUC)

- Generalized zero-shot metrics that consider both worlds

Cross-domain evaluation is non-negotiable. If your medical image classifier only works on one hospital’s equipment, you don’t have zero-shot learning—you have overfitting with extra steps.
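
The harmonic mean is the easiest of these to compute yourself: H = 2 × acc_seen × acc_unseen / (acc_seen + acc_unseen). The sketch below uses made-up labels and assumes per-class averaged accuracy, so that frequent classes don’t dominate the score.

```python
import numpy as np

def per_class_accuracy(y_true, y_pred, classes):
    """Average of the per-class accuracies over the given classes."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    accs = [np.mean(y_pred[y_true == c] == c) for c in classes if np.any(y_true == c)]
    return float(np.mean(accs))

def harmonic_mean(acc_seen, acc_unseen):
    """Harmonic mean of seen and unseen accuracy; 0 if either side collapses."""
    if acc_seen + acc_unseen == 0:
        return 0.0
    return 2 * acc_seen * acc_unseen / (acc_seen + acc_unseen)

# Toy test set: "cat" and "dog" were seen in training, "zebra" was not.
y_true = ["cat", "cat", "dog", "dog", "zebra", "zebra"]
y_pred = ["cat", "cat", "dog", "cat", "dog", "zebra"]

acc_s = per_class_accuracy(y_true, y_pred, classes=["cat", "dog"])
acc_u = per_class_accuracy(y_true, y_pred, classes=["zebra"])
print(acc_s, acc_u, harmonic_mean(acc_s, acc_u))
```

Unlike a plain average, the harmonic mean drops to zero when the model scores zero on unseen classes, so a model that simply ignores new classes can’t hide behind strong seen-class accuracy.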

6. Future Directions

6.1 Emerging research trends

Zero-shot learning isn’t slowing down – it’s exploding in new directions. Right now, researchers are obsessed with making models more robust against distribution shifts. Think about it: most current systems break when they encounter data that looks different from what they’ve seen before.

Some teams are diving into neuro-symbolic approaches, combining the pattern recognition of neural networks with the reasoning capabilities of symbolic AI. This hybrid approach might be the key to handling truly novel scenarios.

Another hot trend? Self-supervised learning techniques that help models build better representations without human labeling. These approaches are creating more flexible feature spaces where zero-shot transfers actually work.

6.2 Integration with few-shot learning

The lines between zero-shot and few-shot are blurring. The most promising systems now combine both approaches dynamically.

Imagine a system that starts with zero-shot attempts but can gracefully adapt when given just a handful of examples. This creates a continuous learning spectrum rather than distinct categories.

Some frameworks are implementing what they call “adaptive shots” – where the system determines how many examples it needs based on the task difficulty. For complex tasks, it might request more examples, while simpler ones work fine with zero.
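
One simple way to picture that continuous spectrum is a class prototype that starts from the description embedding alone and drifts toward real examples as they arrive. The function `blended_prototype` and its `prior_weight` parameter are hypothetical and only meant as a sketch; this is not how any particular framework implements adaptive shots.

```python
import numpy as np

def normalize(v):
    """Scale a vector to unit length."""
    return v / np.linalg.norm(v)

def blended_prototype(description_emb, example_embs, prior_weight=1.0):
    """Class prototype: pure zero-shot with no examples, pulled toward examples as they arrive.

    prior_weight controls how many examples the description is "worth".
    """
    description_emb = np.asarray(description_emb, dtype=float)
    example_embs = np.asarray(example_embs, dtype=float).reshape(-1, description_emb.shape[0])
    total = prior_weight * normalize(description_emb)
    for emb in example_embs:
        total = total + normalize(emb)
    return normalize(total)

desc = np.array([1.0, 0.0])  # embedding of the class description
zero_shot = blended_prototype(desc, [])
few_shot = blended_prototype(desc, [[0.0, 1.0], [0.0, 1.0], [0.0, 1.0]])
print(zero_shot, few_shot)
```

With no examples the prototype is exactly the description embedding, and each added example shifts it a little further, so there is no hard switch between the zero-shot and few-shot regimes.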

6.3 Multimodal zero-shot approaches

The real magic happens when zero-shot jumps between different types of data. Systems that can connect text, images, audio, and video without explicit training for cross-modal tasks are changing the game.

Recent architectures like CLIP and DALL-E showed us what’s possible when models understand the relationships between images and text. But that’s just the beginning.

The next wave focuses on incorporating more senses – touch, spatial awareness, and even physical interactions. Imagine robots that can perform tasks they’ve only read about in manuals.

6.4 Edge computing implementations

Zero-shot learning is breaking free from massive data centers. New compression techniques and model distillation are bringing these capabilities to phones, IoT devices, and embedded systems.

On-device zero-shot learning means privacy-preserving intelligence that works even without internet connections. Your smart home devices could recognize activities they were never explicitly programmed to detect.

The efficiency challenges are significant – how do you pack enough knowledge into a tiny model to enable meaningful zero-shot transfers? Quantization and pruning help, but the real breakthroughs are coming from architecture innovations specifically designed for resource-constrained environments.
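
To give a feel for what quantization buys you, here is a minimal sketch of symmetric per-tensor int8 quantization in NumPy. The function names and the random weight matrix are made up for illustration, and real toolchains use more refined schemes (per-channel scales, calibration data), so treat this as the basic idea only.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantization: float32 weights -> int8 values plus one scale."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Recover approximate float32 weights from int8 values."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.normal(size=(256, 256)).astype(np.float32)  # stand-in for one weight matrix

q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)

print(w.nbytes // q.nbytes)              # 4: int8 needs a quarter of the float32 storage
print(float(np.max(np.abs(w - w_hat))))  # worst-case rounding error, about scale / 2
```

The storage drops by 4x while each weight moves by at most about half a quantization step. Whether that error is tolerable for zero-shot transfer is something you have to measure on your own model.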

Conclusion

Zero-shot learning has emerged as a powerful paradigm in machine learning, enabling models to recognize objects or solve problems they’ve never encountered during training. As we’ve explored, this approach leverages semantic relationships and knowledge transfer to make predictions about new classes without explicit examples. From language translation to rare disease diagnosis and cold-start recommendations, zero-shot learning opens doors to applications where labeled data is scarce or unavailable.

While zero-shot learning offers remarkable flexibility and resource efficiency, implementing effective models requires careful consideration of semantic space design, robust feature extraction, and evaluation strategies. As research continues to address current limitations around semantic gaps and performance variability, the future looks promising. By combining zero-shot approaches with other learning paradigms and leveraging multimodal information, we can build more adaptable AI systems that truly understand the world as humans do. Consider exploring zero-shot learning in your next project—it might be the solution to problems you once thought impossible to solve without extensive labeled datasets.

FAQs

Q1. What is zero-shot learning in simple terms?

Zero-shot learning (ZSL) is a machine learning technique where a model can make predictions about new, unseen data classes without having been explicitly trained on them. It uses semantic understanding or feature relationships to generalize knowledge from known to unknown categories.

Q2. How is zero-shot learning different from traditional machine learning?

Traditional machine learning requires labeled training data for every class it needs to recognize. Zero-shot learning, on the other hand, can classify or understand new classes based on their semantic descriptions or relationships to known concepts, without needing specific training examples.

Q3. Can zero-shot learning completely replace supervised learning?

Not entirely. While ZSL is powerful for generalization and low-data situations, supervised learning still delivers higher accuracy when large amounts of labeled data are available. ZSL is more useful when labeling is expensive or impractical.

Q4. What are real-world examples of zero-shot learning?

Examples include:

- GPT models answering questions they weren’t specifically trained for.

- CLIP identifying new objects using image-text associations.

- Medical imaging systems detecting rare diseases.

- E-commerce platforms recommending new products without historical data.

Q5. What are the main challenges of zero-shot learning?

- Lower accuracy compared to supervised models

- Semantic gaps between training and testing data

- High model complexity and computational demands

- Difficulty in interpretation and debugging

- Potential biases inherited from training data

Q6. What role do prompts play in zero-shot learning?

Prompts are instructions or questions that guide zero-shot models like GPT to perform specific tasks. Effective prompt engineering can drastically improve the performance of zero-shot models in NLP and beyond.

Q7. How does zero-shot learning work in computer vision?

In vision, zero-shot learning often uses shared embedding spaces. Both image features and class descriptions are projected into a common space, allowing the model to compare and match unseen image classes with their semantic descriptions.

Q8. What is the difference between zero-shot and few-shot learning?

- Zero-shot: No examples of the new class during training.

- Few-shot: Very limited examples (e.g., 1–5) are provided for new classes. Few-shot learning is useful when only a small amount of labeled data is available.

Q9. Are zero-shot models suitable for deployment on edge devices?

Yes, although it’s challenging. Through techniques like model compression, quantization, and distillation, zero-shot learning is being brought to edge devices for real-time, offline decision-making, particularly in smart home and IoT environments.

Q10. What tools or frameworks support zero-shot learning?

Some popular tools and frameworks include:

- OpenAI’s GPT and CLIP

- Hugging Face Transformers

- Google’s T5 and PaLM

- Facebook’s XLM-R and DINO

These provide pre-trained models with zero-shot capabilities for NLP, vision, and cross-modal tasks.